Goals of the workshop: have a basic understanding of parallel programming, MPI, and OpenMP, and run a few examples of C/C++ code on Princeton HPC systems. Open MPI, "A High Performance Message Passing Library": the Open MPI Project is an open source Message Passing Interface implementation that is developed and maintained by a consortium of academic, research, and industry partners.

Parallel programs enable users to fully utilize the multi-node structure of supercomputing clusters. Message Passing Interface (MPI) is a standard used to allow several different processors on a cluster to communicate with each other. In this tutorial we will be using the Intel C++ Compiler, GCC, IntelMPI, and OpenMPI to create a multiprocessor 'hello world' program in C++. This tutorial assumes the user has experience in both the Linux terminal and C++.

Resources:

Setup and 'Hello, World'

Begin by logging into the cluster and using ssh to log in to a compile node. This can be done with the command:

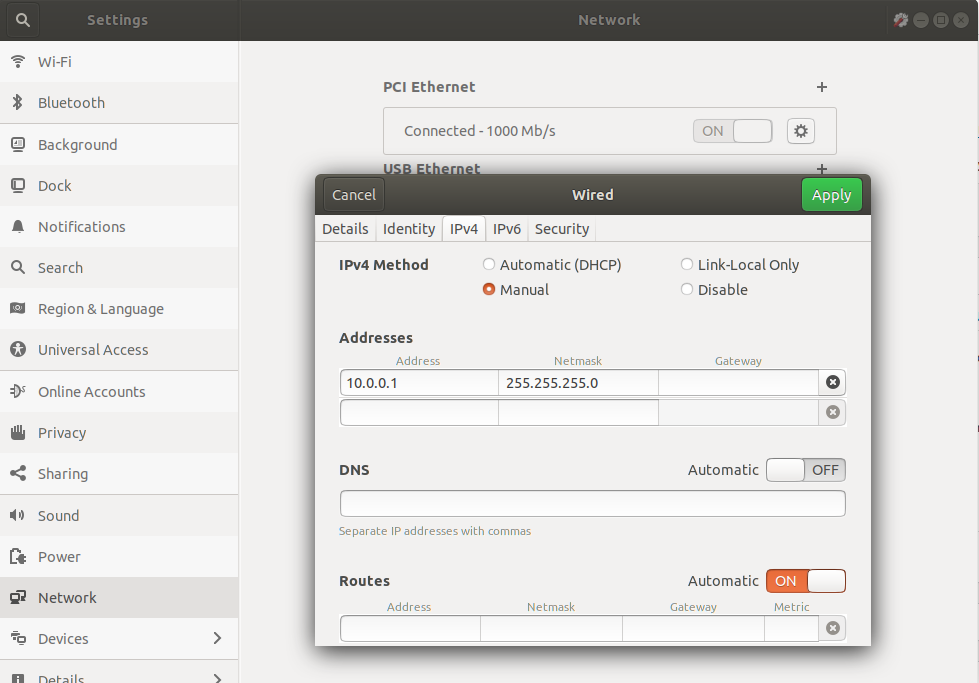

Next we must load MPI into our environment. Begin by loading in your choice of C++ compiler and its corresponding MPI library. Use the following commands if using the GNU C++ compiler:

GNU C++ Compiler

Or, use the following commands if you prefer to use the Intel C++ compiler:

Intel C++ Compiler

This should prepare your environment with all the necessary tools to compile and run your MPI code. Let's now begin to construct our C++ file. In this tutorial, we will name our code file: hello_world_mpi.cpp

Open hello_world_mpi.cpp and begin by including the C standard library <stdio.h> and the MPI library <mpi.h>, and by constructing the main function of the C++ code:

Now let's set up several MPI functions to parallelize our code. In this 'Hello World' tutorial we'll be using the following four functions:

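MPI_Init(): initializes the MPI environment. It takes the addresses of main's argc and argv and should be called before other MPI routines.

MPI_Comm_size(): stores the number of processes in a communicator (here MPI_COMM_WORLD, which contains every launched process) into an integer variable.

MPI_Comm_rank(): stores the rank of the calling process, an ID from 0 to size - 1, into an integer variable.

MPI_Finalize(): shuts down the MPI environment; no further MPI routines should be called after it.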

These four functions should be enough to get our parallel 'hello world' running. We will begin by creating two variables, process_Rank and size_Of_Cluster, to store an identifier for each of the parallel processes and the number of processes running in the cluster, respectively. We will also call the MPI_Init function, which initializes the MPI environment (including the MPI_COMM_WORLD communicator):

Let's now obtain some information about our cluster of processors and print the information out for the user. We will use the functions MPI_Comm_size() and MPI_Comm_rank() to obtain the count of processes and the rank of a process, respectively:

Lastly, let's close the environment using MPI_Finalize():

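Now the code is complete and ready to be compiled. Because this is an MPI program, we compile it with the MPI compiler wrapper (a command that calls the underlying C++ compiler with the MPI include and library flags) instead of invoking the compiler directly. Be sure to use the correct command based on which compiler you have loaded.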

OpenMPI

Intel MPI


This will produce an executable we can pass to Summit as a job. In order to execute MPI compiled code, a special command must be used:

The flag -np specifies the number of processes to launch when executing the program.

In your job script, load the same compiler and MPI library you used above to compile the program, and run the job with Slurm to execute the application. Your job script should look something like this:

OpenMPI

Intel MPI

It is important to note that on Summit, there is a total of 24 cores per node. For applications that require more than 24 processes, you will need to request multiple nodes in your job. Our output file should look something like this:

Ref: http://www.dartmouth.edu/~rc/classes/intro_mpi/hello_world_ex.html

MPI Barriers and Synchronization

Like many other parallel programming utilities, MPI provides synchronization, an essential tool for coordinating processes and for ensuring that certain sections of code are handled at certain points. MPI_Barrier is a collective operation that holds each process at a certain line of code until all processes in the communicator have reached that line. MPI_Barrier can be called as such:

MPI_Barrier(MPI_COMM_WORLD);

To get a handle on barriers, let's modify our 'Hello World' program so that it prints out each process in order of rank. Starting with our 'Hello World' code from the previous section, begin by nesting our print statement in a loop:

Next, let's implement a conditional statement in the loop to print only when the loop iteration matches the process rank.

Lastly, implement the barrier function in the loop. This will ensure that all processes are synchronized when passing through the loop.


Compiling and running this code will result in this output:

Message Passing

Message passing is the primary utility in the MPI application programming interface that allows processes to communicate with each other. In this tutorial, we will learn the basics of message passing between 2 processes.

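Message passing in MPI is handled by two functions, MPI_Send() and MPI_Recv(). Their arguments are as follows:

MPI_Send(const void* buf, int count, MPI_Datatype datatype, int dest, int tag, MPI_Comm comm): buf is the address of the data to send, count is the number of elements, datatype is the MPI type of each element (for example MPI_INT), dest is the rank of the receiving process, tag is an integer label the receive can match on, and comm is the communicator (for example MPI_COMM_WORLD).

MPI_Recv(void* buf, int count, MPI_Datatype datatype, int source, int tag, MPI_Comm comm, MPI_Status* status): buf is where the received data is stored, count is the maximum number of elements to receive, datatype, tag, and comm play the same roles as in MPI_Send, source is the rank of the sending process, and status receives details about the message (pass MPI_STATUS_IGNORE if they are not needed).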


Let's implement message passing in an example:

Example

We will create a two-process program that will pass the number 42 from one process to another. We will use our 'Hello World' program as a starting point for this program. Let's begin by creating a variable to store some information.

Now create if and else if conditionals that specify the appropriate process to call the MPI_Send() and MPI_Recv() functions. Ranks are numbered from 0, so with 2 processes the ranks are 0 and 1. In this example we want process 0 to send out a message containing the integer 42 to process 1.

Lastly, we must call MPI_Send() and MPI_Recv(). We will pass the following parameters into the functions:

Let's implement these functions in our code:

Compiling and running our code with 2 processes will result in the following output:

Group Operators: Scatter and Gather

Group operators are very useful for MPI. They allow for swaths of data to be distributed from a root process to all other available processes, or data from all processes can be collected at one process. These operators can eliminate the need for a surprising amount of boilerplate code via the use of two functions:

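MPI_Scatter: sends a separate chunk of an array held by a root process to each process in the communicator, including the root itself.

MPI_Gather: the converse of MPI_Scatter; collects a chunk of data from each process into an array on the root process, ordered by rank.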

In order to get a better grasp on these functions, let's go ahead and create a program that will utilize the scatter function. Note that the gather function (not shown in the example) works similarly, and is essentially the converse of the scatter function. Further examples which utilize the gather function can be found in the MPI tutorials listed as resources at the beginning of this document.

Example

We will create a program that scatters one element of a data array to each process. Specifically, this code will scatter the four elements of an array to four different processes. We will start with a basic C++ main function along with variables to store process rank and number of processes.

Now let's set up the MPI environment using MPI_Init, MPI_Comm_size, MPI_Comm_rank, and MPI_Finalize:

Next, let's generate an array named distro_Array to store four numbers. We will also create a variable called scattered_Data that we shall scatter the data to.

Now we will begin the use of group operators. We will use the operator scatter to distribute distro_Array into scattered_Data. Let's take a look at the parameters we will use in this function:

Let's see this implemented in code. We will also write a print statement following the scatter call:

Running this code will print out the four numbers in the distro array as four separate numbers, each from a different process (note the order of ranks isn't necessarily sequential):


I'm trying to build the Salomé engineering simulation tool, and am having trouble compiling with OpenMPI. The full text of the error is at http://lyre.mit.edu/~powell/salome-error . The crux of the problem can be reproduced by trying to compile a C++ file with:

    extern "C"
    {
    #include "mpi.h"
    }

At the end of mpi.h, the C++ headers get loaded while in extern C mode, and the result is a vast list of errors.

It seems odd that one of the cplusplus variables is #defined while in
extern C mode, but that's what seems to be happening.

I see that it should be possible (and verified that it is possible) to avoid this by using -DOMPI_SKIP_MPICXX. But something in me says that shouldn't be necessary, that extern C code should only include C headers and not C++ ones.

This is using Debian lenny (testing) with gcc 4.2.1-6.

Thanks,

-Adam
